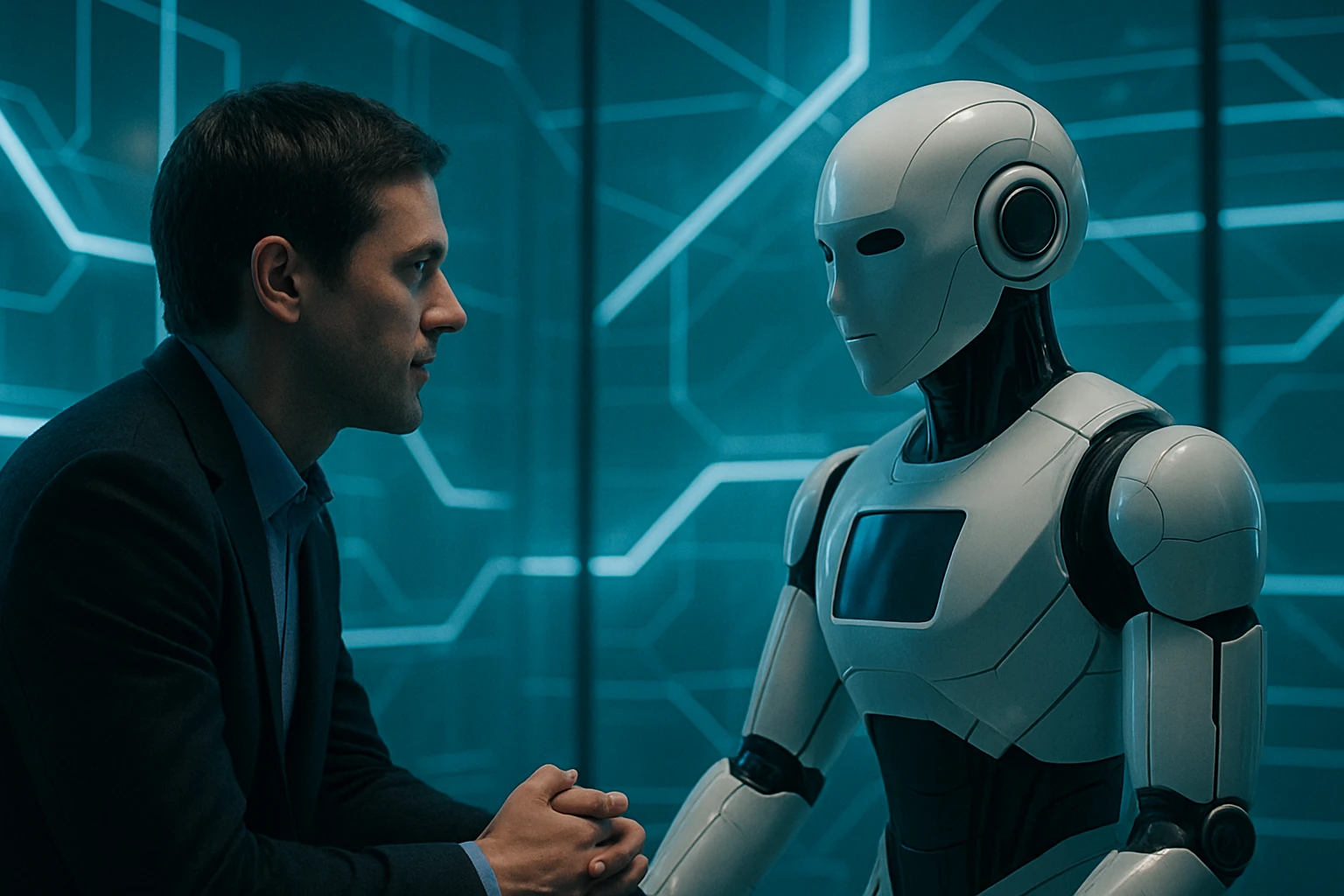

The Conflict between AI and Humans project studies conflicts that may arise between AI systems and human interests, including scenarios where AI decisions clash with human values, ethics, and priorities. The research aims to develop strategies for managing and mitigating such conflicts, so that AI technologies are used in ways that respect and protect human rights.

By examining case studies and engaging with stakeholders across sectors, the project seeks to create frameworks for harmonious coexistence between AI and humans. The goal is to identify and address points of contention early, promoting collaboration and understanding between AI systems and the people they serve.